The EU AI Act is not light bedtime reading. It has over one hundred and fifty pages packed with legal jargon. It covers everything from defining "input data" to laying out the documentation rules for high-risk AI systems. And, like getting lost in a dense forest, it is easy to miss the bigger picture when you are buried in the details.

Context is Everything

To gain a more nuanced understanding of the regulation, it helps to trace its history. Do not worry, we are not going to make you sift through six years of documents. Instead, we will break it down for you, showing how the framers of the regulation saw their work within the larger context.

Impact on Regulation

The framework for trustworthy AI released by the High-Level Expert Group has greatly influenced the EU AI Act. The legal text explicitly references the framework's seven requirements for trustworthy AI, which, although non-binding, have informed many of the concepts and terms that feature directly in the legislation. This is especially true of the human oversight, transparency and technical robustness obligations for high-risk AI systems, as is evident from these excerpts from the Act:

"Requirements should apply to high-risk AI systems as regards risk management, the quality and relevance of data sets used, technical documentation and record-keeping, transparency and the provision of information to deployers, human oversight, and robustness, accuracy and cybersecurity. Those requirements are necessary to effectively mitigate the risks for health, safety and fundamental rights"

"High-risk AI systems shall be designed and developed in such a way that they achieve an appropriate level of accuracy, robustness, and cybersecurity, and that they perform consistently in those respects throughout their lifecycle"

"Human oversight shall aim to prevent or minimise the risks to health, safety or fundamental rights that may emerge when a high-risk AI system is used in accordance with its intended purpose or under conditions of reasonably foreseeable misuse, in particular where such risks persist despite the application of other requirements set out in this Section"

Understanding the framework will give you a good grounding in the EU AI Act and some of its underlying principles.

An Ecosystem of Trust

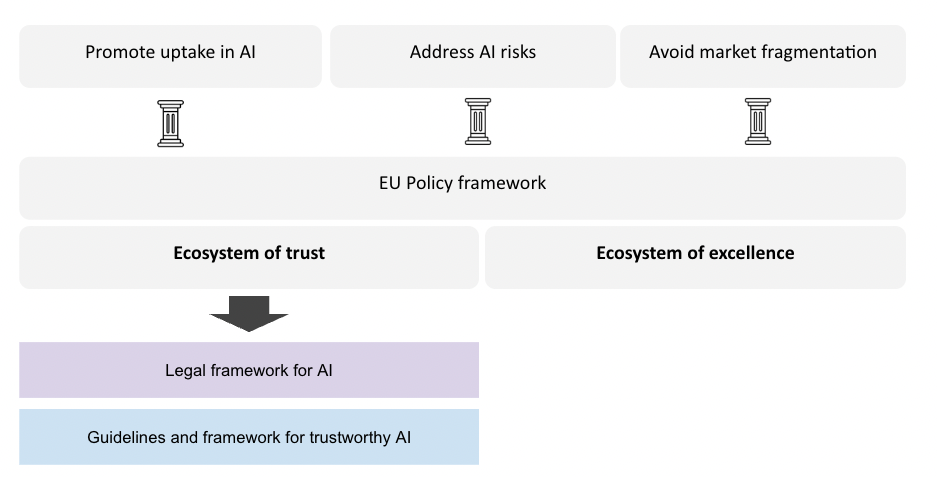

Between 2018 and 2020, the European Commission identified two key policy objectives to increase the uptake of AI, address AI risks, and avoid market fragmentation. These were common EU policies to establish:

- An ecosystem of trust in AI

- An ecosystem of excellence in AI

The ecosystem of trust, the European Commission reasoned, in turn required a clear EU-wide legal framework for AI as well as guidelines and a framework for the development of trustworthy AI. We will deconstruct both today.

Legal Framework for AI

The push to create a comprehensive legal framework for AI came from two main goals: building trust in AI and avoiding market fragmentation in the EU caused by different national laws.

The legal framework was envisaged with three pillars in mind, both to mitigate the risks of AI and to provide a redress mechanism for any harm caused by AI:

- The EU AI Act, which provides the rules concerning the development and use of AI systems. The Act was conceived as a horizontal framework that would be largely sector-agnostic.

- The General Product Safety Regulation (GPSR), which, while not specific to AI, provides some sector-specific guardrails. In later articles we shall see how AI systems have increased calls for certain changes to the GPSR, but let's keep it simple for now.

- An AI Liability Directive, currently at the proposal stage, which addresses liability issues related to AI systems.

We will dive deep into the proposed liability directive in our articles on March 6th and 13th - you can find out about it here. A primer on the EU AI Act is planned for March 20th.

Guidelines and Framework for Trustworthy AI

The guidelines and framework for trustworthy AI, initially released by the High-Level Expert Group in 2018, are not just useful as non-binding best practice for organizations but, as noted earlier, have also had a significant influence on the legislation itself.

The framework can be divided into three logical parts:

- Part one defines what trustworthy AI is.

- Part two details the seven requirements needed to realize trustworthy AI.

- Part three provides concrete assessment lists to help organizations evaluate their readiness in meeting the requirements.

Defining Trustworthy AI

The framework defines trustworthy AI as AI that is lawful, ethical and robust:

Lawful

Complies with all applicable laws and regulations. There are many levels of laws governing the EU including EU primary laws, secondary laws and laws of the individual member states.

Ethical

Adheres to ethical principles and values.

Robust

Gives confidence that AI systems will not cause unintentional harm and will perform in a safe, secure and reliable manner.

Trustworthy AI, the framework notes, is further grounded in ethical principles which include human autonomy, prevention of harm, fairness, and explicability. Explicability here is defined as a means to provide transparency and explainability in decisions and processes of the AI system.

Realizing Trustworthy AI

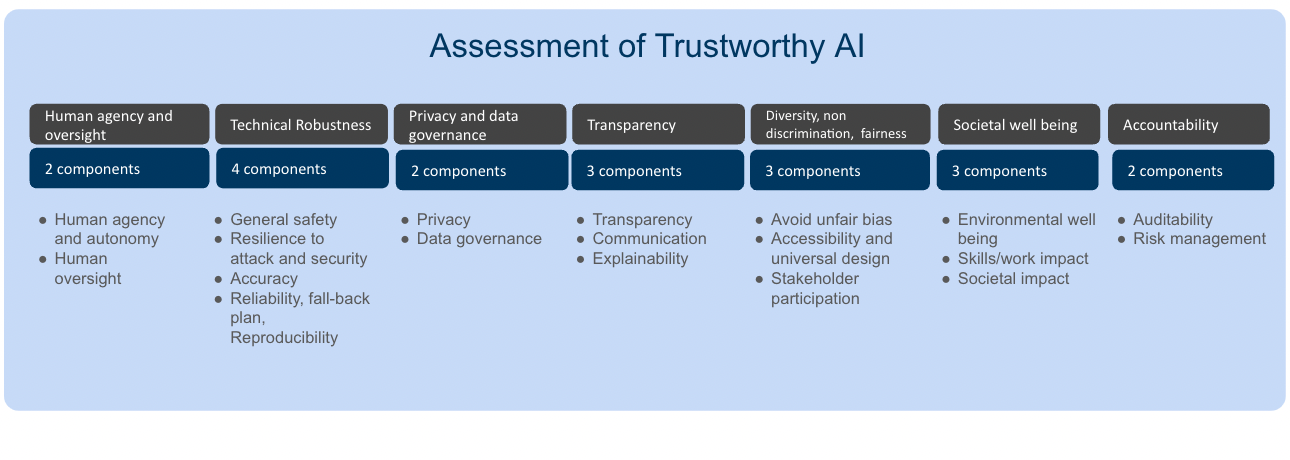

Once trustworthy AI is defined, the framework details how to realize it. Seven key requirements are introduced which together help create trustworthy AI systems:

Human agency and oversight

Enabling meaningful human control. The framework distinguishes several oversight concepts:

- Human in the loop (HITL): Capability for human intervention in every decision cycle of the system.

- Human on the loop (HOTL): Capability for human intervention during the design cycle of the system and monitoring the system's operation.

- Human in command (HIC): Capability to oversee the overall activity of the AI system (including its broader economic, societal, legal and ethical impact) and the ability to decide when and how to use the system in any particular situation.

Technical robustness and safety

Ensuring reliability, security, and resilience. This includes resilience to attack, a fallback plan and general safety, accuracy, and reliability and reproducibility.

Privacy and data governance

Guaranteeing data protection and integrity. Privacy protection covers not only the data an AI system collects but also what it infers. For example, digital records of human behaviour may allow AI systems to infer not only individuals' preferences, but also their sexual orientation, age, gender, and religious or political views.

Transparency

Enhancing traceability and communication. The elements of transparency include traceability, explainability and communication.

Diversity, non-discrimination, and fairness

Avoiding biases, fostering inclusivity and ensuring stakeholder participation.

Societal and environmental well-being

Addressing broader social and ecological impacts.

Accountability

Implementing mechanisms for oversight, responsibility, and redress. In particular, the AI system should be auditable, adverse impacts should be reported, and tradeoffs should be addressed in a rational manner.

Assessing Trustworthiness of AI Systems

Finally, the framework addresses how to evaluate readiness for meeting the seven requirements essential for building a trustworthy AI system. The assessment list poses around a dozen questions for each requirement, and each requirement is further broken down into its constituent components, as illustrated below.

Opinion

The framework is useful for understanding the evolving regulatory landscape but has limited value at an organizational level. If you wish to understand many of the underlying concepts of the EU AI Act and its evolution, it is useful to review the framework. The assessment list also provides some guidance and can act as a starting point for practitioners who are not experienced in AI governance.

For organizations, however, the framework falls short. This may well be because it was never intended to be a comprehensive document for organizations but rather a simple scaffolding for regulators. The requirements, and especially the assessment list, offer limited guidance on how organizations can develop their AI governance frameworks or manage AI risks. To this extent, organizations may find the AI Risk Management Framework by the US National Institute of Standards and Technology more useful; it can be found here. Recently, the European Commission and related organizations, such as the Joint Research Centre, have made significant efforts to release templates and guides which are helpful to organizations - we will review these at a later date.

We will release a detailed paper on arXiv on AI risk management and AI governance from an organizational perspective next week - so stay tuned.

Right-Sizing AI Risk Management: Smarter Strategies for Effective AI Governance

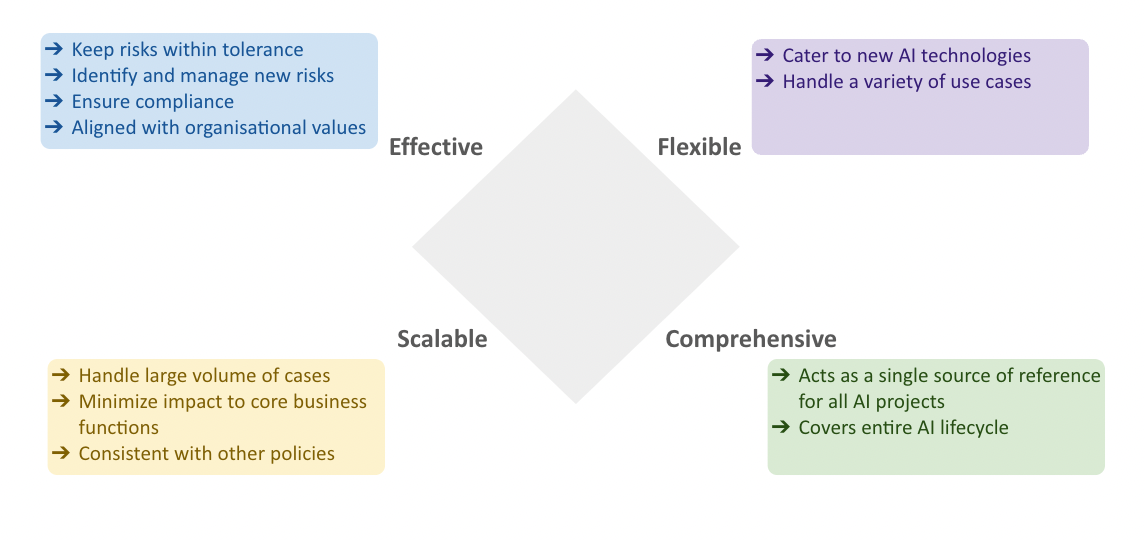

AI governance frameworks need to be effective, flexible, scalable and comprehensive: effective to keep risks within tolerance; flexible to cater to new technologies and use cases; scalable so that they can handle a large number and variety of projects without impeding the core business functions of the organization; and comprehensive to act as a single reference for risk management of all AI projects. There are inherent tradeoffs among the four characteristics, and navigating them is key to crafting a sustainable AI governance framework.

Importance of Right-Sizing AI Risk Management

Right-sizing AI risk management helps create scalable and flexible frameworks while maintaining their effectiveness. This is particularly important in the AI context for four reasons:

- The accelerating pace of AI technological change. New algorithms and architectures with novel capabilities and risks are expected to come about with increasing frequency. AI governance frameworks need to be able to address these.

- The rapid commoditization of AI technologies. Third-party vendors, libraries and development frameworks appear within weeks of new AI algorithms. These trends greatly reduce the time to market of new AI applications. Coupled with competitive pressures, we can expect ever-shortening time-to-market cycles for AI-driven applications, which will place greater demands on governance frameworks to be scalable and not hinder innovation.

- The nature of AI systems and models, which require near-continuous changes. Whether fine-tuning third-party AI models or re-training in-house AI models, AI systems need to be worked on continuously to ensure improvements in performance and alignment.

- The growing application of AI to a wide variety of use cases. Materiality of risk depends on a variety of factors, including where and how the AI is applied, the regulatory and legal environment, and various external circumstances.

Given the realities of modern AI systems highlighted above, a one-size-fits-all approach is difficult to operationalize.

Flexibility

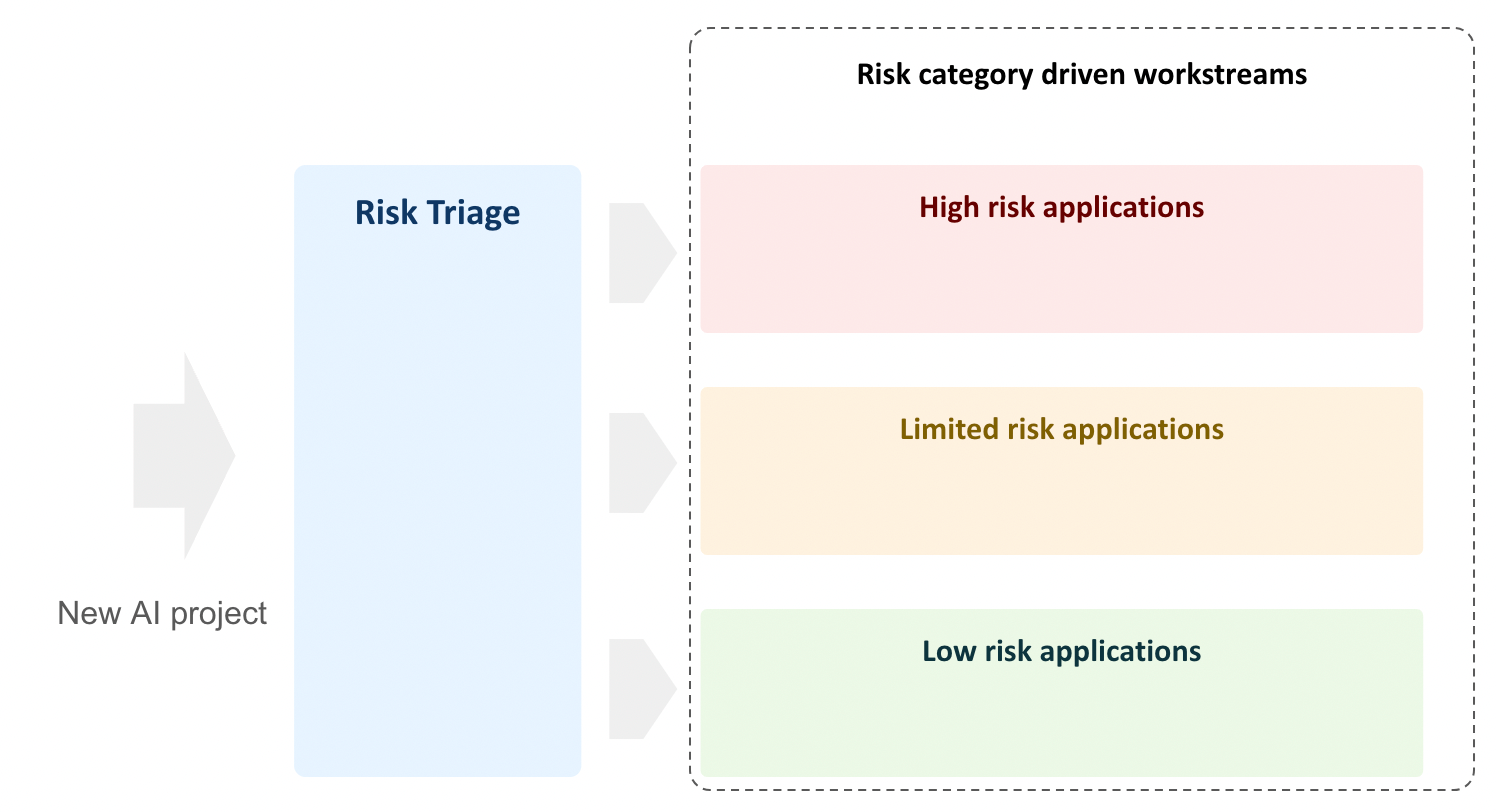

Our paper on AI governance addresses both flexibility and scalability in detail and draws some inspiration from the work by NIST. In particular, we have found it effective to create a two-tiered structure which separates the different functions of AI governance. This keeps the program effective, because organization-wide policies are respected, while leaving it flexible enough to follow different workstreams for different use cases based on appropriate risk triage and categorization.

Make Governance AI ready

This first tier fulfills organization-wide policy objectives, ensures consistency and alignment with other frameworks and standards, and provides high-level guidance and prescriptions. Guidelines for application-level risk categorization and triage are part of this tier.

MAP function

This second tier operationalizes the framework and is part of the risk management lifecycle. It incorporates the essential use-case-specific information which completes the picture. At this stage, triage and application risk categorization are performed, and the assigned risk classification guides the subsequent workstreams.
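The split between the two tiers can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tier names, the three screening questions and the workstream descriptions are hypothetical and are not taken from our paper, from NIST or from the EU AI Act. The point is the shape: the rules and workstreams are defined once at the organization level, and each use case only supplies its own facts.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    # Illustrative tier names; an organization would define its own.
    LOW = "low"
    LIMITED = "limited"
    HIGH = "high"
    PROHIBITED = "prohibited"


@dataclass(frozen=True)
class UseCase:
    # Hypothetical screening questions answered per project.
    name: str
    affects_individuals: bool  # outputs influence decisions about people
    regulated_domain: bool     # e.g. credit, employment, healthcare
    banned_practice: bool      # falls under an organization-wide ban


# First tier: organization-wide policy, written once and reused by every project.
WORKSTREAMS = {
    RiskTier.LOW: "self-assessment",
    RiskTier.LIMITED: "self-assessment plus transparency review",
    RiskTier.HIGH: "full risk assessment with independent review",
    RiskTier.PROHIBITED: "do not proceed",
}


def triage(use_case: UseCase) -> RiskTier:
    """Second tier: apply the organization-wide rules to one use case."""
    if use_case.banned_practice:
        return RiskTier.PROHIBITED
    if use_case.affects_individuals and use_case.regulated_domain:
        return RiskTier.HIGH
    if use_case.affects_individuals:
        return RiskTier.LIMITED
    return RiskTier.LOW


def workstream_for(use_case: UseCase) -> str:
    """Look up the workstream that the assigned tier leads to."""
    return WORKSTREAMS[triage(use_case)]
```

For example, `workstream_for(UseCase("resume screening", True, True, False))` returns `"full risk assessment with independent review"`, while an internal log summarizer that affects no individuals lands in `"self-assessment"`.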

Scalability

Both risk and business units should have a common aim of ensuring core business functions of the organization are not affected while at the same time ensuring AI risks are kept within tolerance. While the previous horizontal tiering mechanism gave the underlying capability to the organization, "vertical" tiering via a triage process will permit it to address scale.

The application-level risk categorization, while aligned with the emerging regulatory landscape, is illustrative; in general, a more fit-for-purpose tiering needs to be created. Service levels can be set with escalations built in, especially for the low and limited risk tiers, to ensure processes do not become bureaucratic and to support business planning.
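As a rough sketch of what service levels with built-in escalations might look like, the Python below attaches a review deadline and an escalation contact to each risk tier. Everything specific here is an assumption made for illustration: the turnaround times, the escalation contacts and the use of calendar days are hypothetical and would need to be set by each organization.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ServiceLevel:
    review_days: int  # target turnaround for the risk review, in calendar days
    escalate_to: str  # who is notified once the target is missed


# Hypothetical values; shorter targets for lower tiers keep reviews lightweight.
SERVICE_LEVELS = {
    "low": ServiceLevel(review_days=5, escalate_to="risk team lead"),
    "limited": ServiceLevel(review_days=10, escalate_to="risk team lead"),
    "high": ServiceLevel(review_days=30, escalate_to="AI governance committee"),
}


def review_status(tier: str, submitted: date, today: date) -> str:
    """Report whether a review is within its service level or must escalate."""
    level = SERVICE_LEVELS[tier]
    due = submitted + timedelta(days=level.review_days)
    if today <= due:
        return f"on track, due {due.isoformat()}"
    return f"overdue, escalate to {level.escalate_to}"
```

A low-risk project submitted on 3 March 2025 and checked on 7 March gives `"on track, due 2025-03-08"`; checked on 10 March it gives `"overdue, escalate to risk team lead"`. Publishing the deadlines up front is also what lets business units plan around the review.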

Takeaways

- AI applications are increasingly ubiquitous, technology is changing fast, risk management cycles are getting shorter and regulatory obligations are tiered. Given all this, the consensus is that a one-size-fits-all approach to AI governance is not practical for the vast majority of organizations.

- The tradeoffs between competing objectives are real. Comprehensive approaches, if not designed properly, can hurt the scalability and flexibility of a program, making it too governance-heavy and stifling innovation. These tradeoffs can be mitigated with a proper understanding of the system and smarter approaches, but they cannot be eliminated. This is especially true for organizations in regulated sectors, which need to exercise greater caution.

- Approaches need to align with the emerging regulatory landscape in order to minimize future regulatory and legal risks.

- A tiered approach to risk management, as proposed here, can help better balance the tradeoffs in a more granular and sustainable manner. This also aligns with the emerging regulatory trends.

- Having said all this, nothing beats a solid culture which requires stakeholders to understand and appreciate both AI innovation and risk management.

References

AI Risk Management Framework | NIST. (n.d.). National Institute of Standards and Technology. Retrieved August 21, 2025, from https://www.nist.gov/itl/ai-risk-management-framework

EU AI Act: first regulation on artificial intelligence | Topics. (2025, February 19). European Parliament. Retrieved August 21, 2025, from https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence